2.3 Properties of estimators

Before we move further, it is important to understand the terms bias, efficiency and consistency of estimates of parameters, which are directly related to the LLN and the CLT. Although there are strict statistical definitions of these terms (you can easily find them in Wikipedia or anywhere else), I do not want to copy-paste them here, because only a couple of points are important in our context.

Note that all the discussions in this chapter relate to the estimates of parameters, not to the distribution of a random variable itself. A common mistake that students make when studying statistics is to think that the properties apply to the variable \(y_t\) instead of the estimates of its parameters (e.g. the mean of \(y_t\)).

2.3.1 Bias

Bias refers to the expected difference between the estimated value of a parameter (on a specific sample) and the “true” one (in the true model). Having unbiased estimates of parameters is important because they should lead to more accurate forecasts (at least in theory). For example, if the estimated parameter is equal to zero, while in fact it should be 0.5, then the model will not take the provided information into account correctly and as a result will produce less accurate point forecasts and incorrect prediction intervals. In an inventory context this may mean that we constantly order 100 units fewer than needed only because the parameter is lower than it should be.

The classical example of bias in statistics is the estimation of variance in sample. The following formula gives a biased estimate of variance in sample: \[\begin{equation} \mathrm{V}(y) = \frac{1}{T} \sum_{t=1}^T \left( y_t - \bar{y} \right)^2, \tag{2.1} \end{equation}\] where \(T\) is the sample size and \(\bar{y} = \frac{1}{T} \sum_{t=1}^T y_t\) is the mean of the data. There are many proofs of this in the literature (even Wikipedia (2020a) has one), so we will not spend time on that. Instead, we will see this effect in the following simple simulation experiment:

mu <- 100

sigma <- 10

nIterations <- 1000

# Generate data from normal distribution, 10,000 observations

y <- rnorm(10000,mu,sigma)

# This function returns two variances for a sample y:

# the unbiased one first (var() divides by T-1), then the biased one from (2.1)

varFunction <- function(y){

    return(c(var(y), mean((y-mean(y))^2)))

}

# Calculate biased and unbiased variances for the sample of 30 observations,

# repeat nIterations times

varValues <- replicate(nIterations, varFunction(sample(y,30)))

This way we have generated 1000 samples of 30 observations each and calculated the variance for each of them using formula (2.1) and the corrected one. Now we can plot the results in order to see how it worked out:

# TeX() used in the legends comes from the latex2exp package

library(latex2exp)

par(mfcol=c(1,2))

# Histogram of the biased estimate

hist(varValues[2,], xlab="V(y)", ylab="y", main="Biased estimate of V(y)")

abline(v=mean(varValues[2,]), col="red")

legend("topright",legend=TeX(paste0("E$\\left(V(y)\\right)$=",round(mean(varValues[2,]),2))),lwd=1,col="red")

# Histogram of unbiased estimate

hist(varValues[1,], xlab="V(y)", ylab="y", main="Unbiased estimate of V(y)")

abline(v=mean(varValues[1,]), col="red")

legend("topright",legend=TeX(paste0("E$\\left(V(y)\\right)$=",round(mean(varValues[1,]),2))),lwd=1,col="red")

Figure 2.20: Histograms for biased and unbiased estimates of variance.

Every run of this experiment will produce different plots, but typically we will see that the biased estimate of variance (the histogram on the left-hand side of the plot) has a lower mean than the unbiased one. This is a graphical example of the effect of not taking the number of estimated parameters into account. The correct formula for the unbiased estimate of variance is: \[\begin{equation} s^2 = \frac{1}{T-k} \sum_{t=1}^T \left( y_t - \bar{y} \right)^2, \tag{2.2} \end{equation}\] where \(k\) is the number of all independent estimated parameters. In this simple example \(k=1\), because we only estimate the mean (the variance is based on it). Comparing formulae (2.1) and (2.2), we can say that the bias disappears with the increase of the sample size: when \(T\) becomes big enough, the difference between \(\frac{1}{T}\) and \(\frac{1}{T-k}\) becomes negligible and the two formulae give almost the same results.
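As a brief sketch of where the bias comes from (stated without proof, and assuming that the observations are independent with a common true variance, denoted here by \(\sigma^2\)), the expectation of the estimate (2.1) is: \[ \mathrm{E}\left(\mathrm{V}(y)\right) = \frac{T-1}{T} \sigma^2 . \] With \(T=30\) and \(\sigma^2=100\), as in the simulation above, this gives roughly \(\frac{29}{30} \times 100 \approx 96.67\) instead of 100, so the mean of the biased estimates should lie somewhere around that value. Multiplying (2.1) by \(\frac{T}{T-1}\) removes the bias and leads to the formula (2.2) with \(k=1\).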

2.3.2 Efficiency

Efficiency means that if the sample size increases, the estimated parameters do not change substantially: they vary in a narrow range (the variance of estimates is small). With inefficient estimates, the increase of the sample size from 50 to 51 observations may lead to a change of a parameter from 0.1 to, let’s say, 10. This is bad because the values of parameters usually influence both point forecasts and prediction intervals. As a result, the inventory decision may differ radically from day to day. For example, we may decide that we urgently need 1000 units of product on Monday and order them, just to realise on Tuesday that we only need 100. Obviously this is an exaggeration, but no one wants to deal with such an erratically behaving model, so we need to have efficient estimates of parameters.

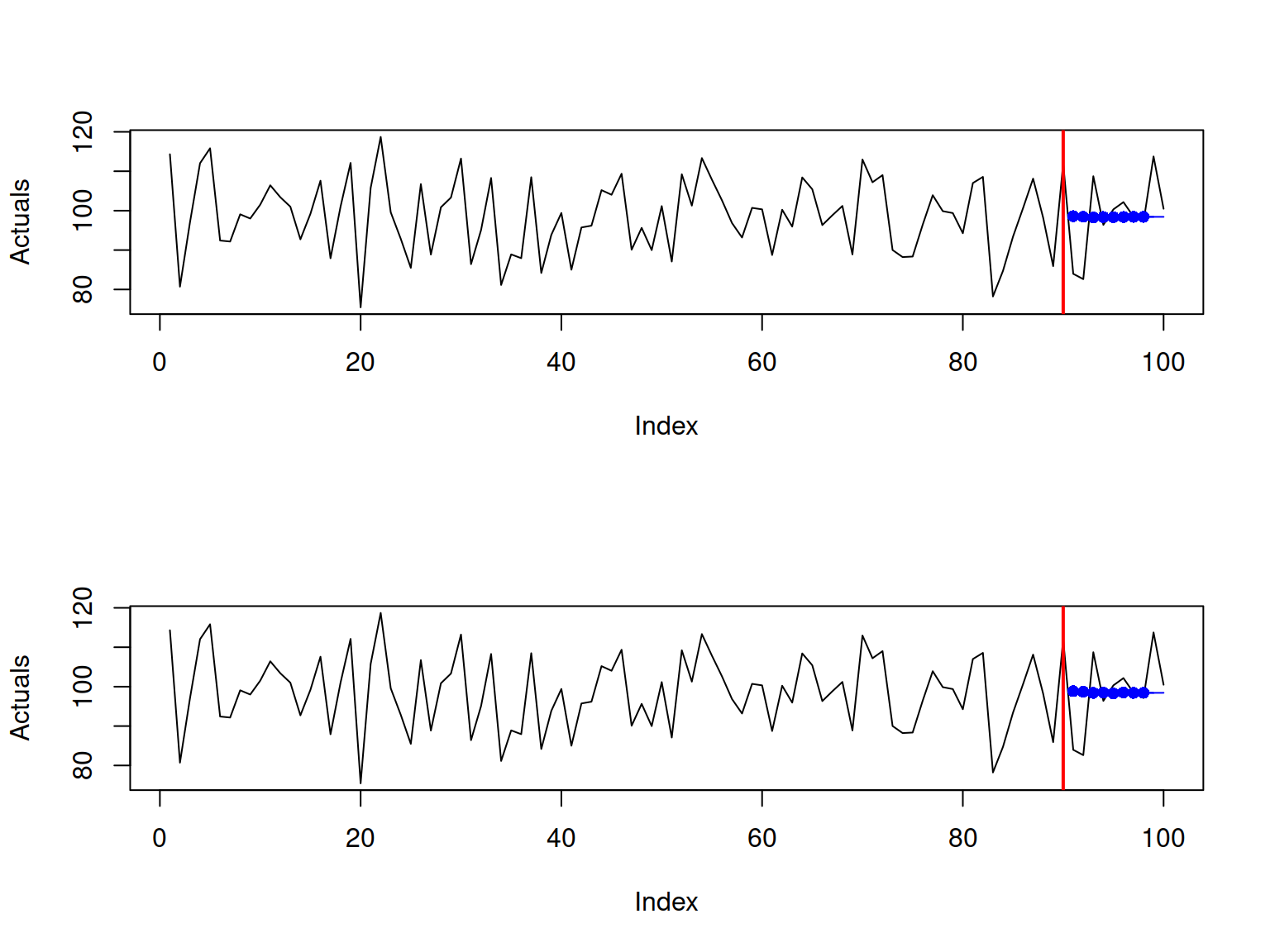

A classical example of an inefficient estimator is the median, when it is used on data that follows the Normal distribution. Here is a simple experiment demonstrating the idea:

mu <- 100

sigma <- 10

nIterations <- 500

obs <- 100

varMeanValues <- vector("numeric",obs)

varMedianValues <- vector("numeric",obs)

y <- rnorm(100000,mu,sigma)

for(i in 1:obs){

    # Draw nIterations samples of size i*100; each column is one sample

    ySample <- replicate(nIterations,sample(y,i*100))

    # Variances of the sample means and of the sample medians across the samples

    varMeanValues[i] <- var(apply(ySample,2,mean))

    varMedianValues[i] <- var(apply(ySample,2,median))

}

In order to compare the efficiency of the estimators, we will take their variances and look at the ratio of the variance of the mean to the variance of the median. If both are equally efficient, then this ratio will be equal to one. If the mean is more efficient than the median, then the ratio will be less than one:

options(scipen=6)

plot(1:100*100,varMeanValues/varMedianValues, type="l", xlab="Sample size",ylab="Relative efficiency")

abline(h=1, col="red")

Figure 2.21: An example of a relatively inefficient estimator.

What we should typically see on this graph is that the black line is below the red one, indicating that the variance of the mean is lower than the variance of the median. This means that the mean is a more efficient estimator of the true location of the distribution \(\mu\) than the median. In fact, it is easy to prove that asymptotically the mean will be 1.57 times more efficient than the median (Wikipedia, 2020b), so the line should converge approximately to the value of 0.64.
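For reference, these numbers follow from the standard asymptotic variances of the two estimators on Normal data (given here without proof; the notation \(\tilde{y}\) for the sample median and \(\sigma^2\) for the true variance is an assumption of this sketch): \[ \mathrm{V}(\bar{y}) = \frac{\sigma^2}{T}, \qquad \mathrm{V}(\tilde{y}) \approx \frac{\pi}{2} \frac{\sigma^2}{T}, \qquad \frac{\mathrm{V}(\bar{y})}{\mathrm{V}(\tilde{y})} \approx \frac{2}{\pi} \approx 0.64 . \] The inverse of this ratio is \(\frac{\pi}{2} \approx 1.57\), which is the relative efficiency of the mean mentioned above.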

2.3.3 Consistency

Consistency means that our estimates of parameters get closer to stable values (the true values in the population) with the increase of the sample size. This follows directly from the LLN and is important because in the opposite case the estimates of parameters will diverge and become less and less realistic. This once again influences both point forecasts and prediction intervals, which will be less meaningful than they should have been. In a way, consistency means that with the increase of the sample size the estimates of parameters become more efficient and less biased. This in turn means that the more observations we have, the better.

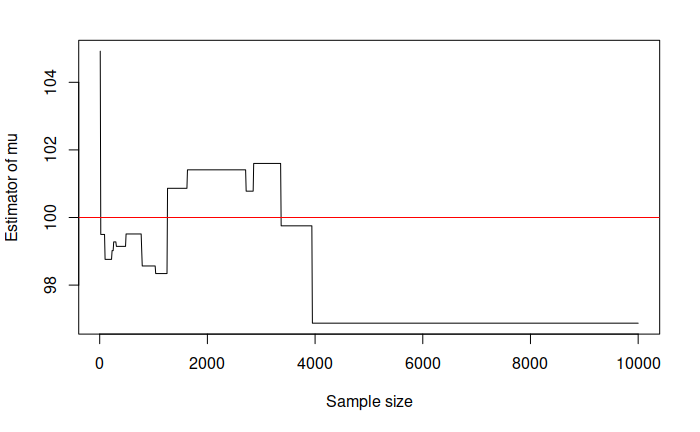
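To see what consistency looks like on data, here is a minimal sketch that tracks the sample mean as the sample grows. It reuses the settings of the other experiments in this section (`mu`, `sigma`); the names `sampleSizes` and `meanEstimates` and the fixed seed are introduced here only for illustration.

```r
set.seed(41)

mu <- 100
sigma <- 10

y <- rnorm(10000, mu, sigma)

# Sample sizes from 10 to 10000 observations, in steps of 10
sampleSizes <- seq(10, 10000, by=10)

# Mean of the first T observations for each sample size T
meanEstimates <- cumsum(y)[sampleSizes] / sampleSizes

plot(sampleSizes, meanEstimates, type="l",
     xlab="Sample size", ylab="Estimate of mu")
abline(h=mu, col="red")
```

What we should typically see is that the line fluctuates noticeably on small samples and then settles close to the red line, which is the behaviour expected from a consistent estimator.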

An example of an inconsistent estimator is the Chebyshev (or max norm) metric. It is formulated in the following way: \[\begin{equation} \mathrm{LMax} = \max \left(|y_1-\hat{y}|, |y_2-\hat{y}|, \dots, |y_T-\hat{y}| \right). \tag{2.3} \end{equation}\] Minimising this norm, we can get an estimate \(\hat{y}\) of the location parameter \(\mu\). The simulation experiment becomes a bit more tricky in this situation, but here is the code to generate the estimates of the location parameter:

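LMax <- function(y){

    # Objective: the largest absolute deviation of the data from the value par

    estimator <- function(par){

        return(max(abs(y-par)))

    }

    # One-dimensional minimisation over the range of the data

    return(optim(mean(y), fn=estimator, method="Brent", lower=min(y), upper=max(y)))

}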

mu <- 100

sigma <- 10

nIterations <- 1000

y <- rnorm(10000, mu, sigma)

LMaxEstimates <- vector("numeric", nIterations)

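# Estimate the location on the first 10, 20, ..., 10000 observations

for(i in 1:nIterations){

    LMaxEstimates[i] <- LMax(y[1:(i*10)])$par

}

And here is how the estimate changes with the increase of the sample size:

# TeX() comes from the latex2exp package

library(latex2exp)

plot(1:nIterations*10, LMaxEstimates, type="l", xlab="Sample size",ylab=TeX("Estimate of $\\mu$"))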

abline(h=mu, col="red")

Figure 2.22: An example of an inconsistent estimator.

While in the example with bias we could see that the lines converge to the red line (the true value) with the increase of the sample size, the Chebyshev metric example shows that the line does not approach the true value, even when the sample size reaches 10000 observations. The conclusion is that the Chebyshev metric produces inconsistent estimates of parameters.
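One way to understand this behaviour is to note that the minimiser of (2.3) has a closed form: the largest absolute deviation is determined only by the smallest and the largest observations, so the optimal value is the midrange, \(\frac{1}{2}\left(\min(y) + \max(y)\right)\). The sketch below checks this on one simulated sample; the names `LMaxNumerical` and `LMaxClosedForm` are hypothetical and used only for this illustration.

```r
set.seed(41)

y <- rnorm(1000, 100, 10)

# Numerical minimisation of the max norm, done in the same way as in LMax()
LMaxNumerical <- optim(mean(y), fn=function(par) max(abs(y-par)),
                       method="Brent", lower=min(y), upper=max(y))$par

# Closed form: the midrange of the data (hypothetical helper for illustration)
LMaxClosedForm <- function(y){
    return((min(y)+max(y))/2)
}

# The two values should coincide up to the tolerance of the optimiser
c(numerical=LMaxNumerical, midrange=LMaxClosedForm(y))
```

Because the estimate depends on two extreme observations only, new observations in the middle of the distribution do not change it at all, while each new extreme value shifts it, which is in line with the erratic line in Figure 2.22.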

Remark. There is a prejudice among practitioners that the situation in the market changes so fast that old observations become useless very quickly. As a result, many companies just throw away the old data. Although the statement about market changes is true in general, forecasters tend to work with models that take this into account (e.g. Exponential smoothing and ARIMA, discussed in this book). These models adapt to the potential changes. So, we may benefit from the old data because it allows us to get more consistent estimates of parameters. Just keep in mind that you can always remove the annoying bits of data, but you can never un-throw away the data.

2.3.4 Asymptotic normality

Finally, asymptotic normality is not critical, but in many cases it is a desired, useful property of estimates. What it tells us is that on large samples the distribution of the estimate of a parameter will be well behaved: approximately Normal, with a specific mean (typically equal to \(\mu\)) and a fixed variance. This follows directly from the CLT. Some statistical tests and mathematical derivations rely on this assumption. For example, when one conducts a significance test for the parameters of a model, this assumption is implied in the process. If the distribution is not Normal, then the confidence intervals constructed for the parameters will be wrong, together with the respective t- and p-values.
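A minimal sketch of this property, in the spirit of the experiments above: we take the mean of samples from a clearly skewed distribution and look at the distribution of the resulting estimates. The choice of the Exponential distribution with rate 1 (true mean equal to one), the sample size of 200 and the name `sampleMeans` are assumptions made only for this illustration.

```r
set.seed(41)

nIterations <- 1000
obs <- 200

# Means of nIterations samples of obs observations from the Exponential distribution
sampleMeans <- replicate(nIterations, mean(rexp(obs, rate=1)))

par(mfcol=c(1,2))

# Histogram of the estimates with the true mean in red
hist(sampleMeans, xlab="Sample mean", main="Distribution of the sample mean")
abline(v=1, col="red")

# QQ-plot of the estimates against the Normal distribution
qqnorm(sampleMeans)
qqline(sampleMeans, col="red")
```

Although the data itself is asymmetric, the histogram of the estimates should look roughly bell-shaped, and the points on the QQ-plot should lie close to the straight line.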

Another important aspect to cover is what the term asymptotic, which we have already used, means in our context. From here on in this book, when this word is used, we refer to an unrealistic hypothetical situation of having all the data in the multiverse, where the time index \(t \rightarrow \infty\). While this is impossible in practice, the idea is useful, because the asymptotic behaviour of estimators and models tells us what to expect on large samples of data. Besides, even if we deal with small samples, it is good to know what will happen if the sample size increases.