Last week, I presented my current work with Anna Sroginis during my visit to IÉSEG School of Management in Lille, France. It was great to see my colleague and friend Sarah Van der Auweraer, and I enjoyed our discussions about forecasting and intermittent demand with members of her group. You can see details […]

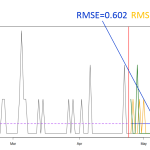

Why do zeroes happen? A model-based view on demand classification